Vision vs. MCP: The Architecture War Shaping Autonomous AI Agents

In the emerging world of autonomous AI, two approaches are defining how agents “do” things: traditional API-driven pipelines and a new wave of vision-powered assistants. But which scales better – and what happens when we combine them?

The Two Paradigms of Action: MCP vs. Vision-Based

AI agents are rapidly evolving from chatbots to fully autonomous task-executors. In this transformation, two distinct architectural paradigms have emerged:

- MCP (Model Context Protocol) – agents that operate through structured API-based integrations.

- Vision-Based Agents – agents that interact with software visually, using screen perception and simulated inputs, like a human assistant would.

MCP agents are the more traditional approach and are efficient in structured environments, while vision-based agents extend an agent’s reach to legacy, non-API, and GUI-only tools.

TL;DR:

- MCP/API agents = speed + structure

- Vision-based agents = flexibility + universality

- Hybrid agents = the future

Definitions

MCP (Model Context Protocol)

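Strictly speaking, the Model Context Protocol is an open standard for connecting models to external tools and data sources. In this article, “MCP” is shorthand for AI agents that invoke external tools or services via APIs, function calls, or plugins. These systems orchestrate tasks by routing structured calls to the appropriate tool.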

- Example: GPT calling a calendar API to schedule a meeting.
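
To make the calendar example concrete, here is a minimal sketch of how a structured tool call might be dispatched. The tool name `schedule_meeting`, its parameters, and the JSON shape of the model output are assumptions made for illustration; they are not taken from any specific vendor API or from the MCP specification.

```python
import json
from datetime import datetime


def schedule_meeting(title: str, start: str, duration_minutes: int) -> dict:
    """Hypothetical tool: stands in for a call to a real calendar API."""
    start_time = datetime.fromisoformat(start)  # validates the timestamp
    return {
        "status": "scheduled",
        "title": title,
        "start": start_time.isoformat(),
        "duration_minutes": duration_minutes,
    }


# Registry of tools the agent is allowed to call (assumed structure).
TOOLS = {"schedule_meeting": schedule_meeting}


def dispatch(tool_call_json: str) -> dict:
    """Parse the model's structured output and route it to the named tool."""
    call = json.loads(tool_call_json)
    tool = TOOLS[call["name"]]
    return tool(**call["arguments"])


# What a model might emit instead of free text (assumed format).
model_output = json.dumps({
    "name": "schedule_meeting",
    "arguments": {
        "title": "Design review",
        "start": "2025-01-15T10:00:00",
        "duration_minutes": 30,
    },
})

result = dispatch(model_output)
print(result["status"], result["start"])
```

The point of the sketch is that the agent never touches a screen: the whole action is a single validated function call, which is where the speed and reliability of this paradigm come from.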

Vision-Based Agents (VTAMs)

Agents that see and manipulate software UIs using a combination of vision models and simulated inputs (mouse clicks, keystrokes).

- Example: An AI opening Excel on-screen, navigating menus, and entering data using vision.

Side-by-Side Comparison

| Factor | MCP / API | Vision-Based |
|---|---|---|
| Integration Effort | High | None |
| Speed | High | Lower (step-by-step UI) |
| Access to Legacy Tools | No | Yes |
| Requires Developer Setup | Yes | No |
| Visual Feedback | No | Yes |
| Adaptability to UI Change | Moderate (APIs stable) | Fragile but improving |
| Reliability | High | Moderate (46–60%) |
| Transparency | Low (backend only) | High (user-visible steps) |
| User Type | Engineers | Everyone |

Benchmark Evidence: AssistGUI and More

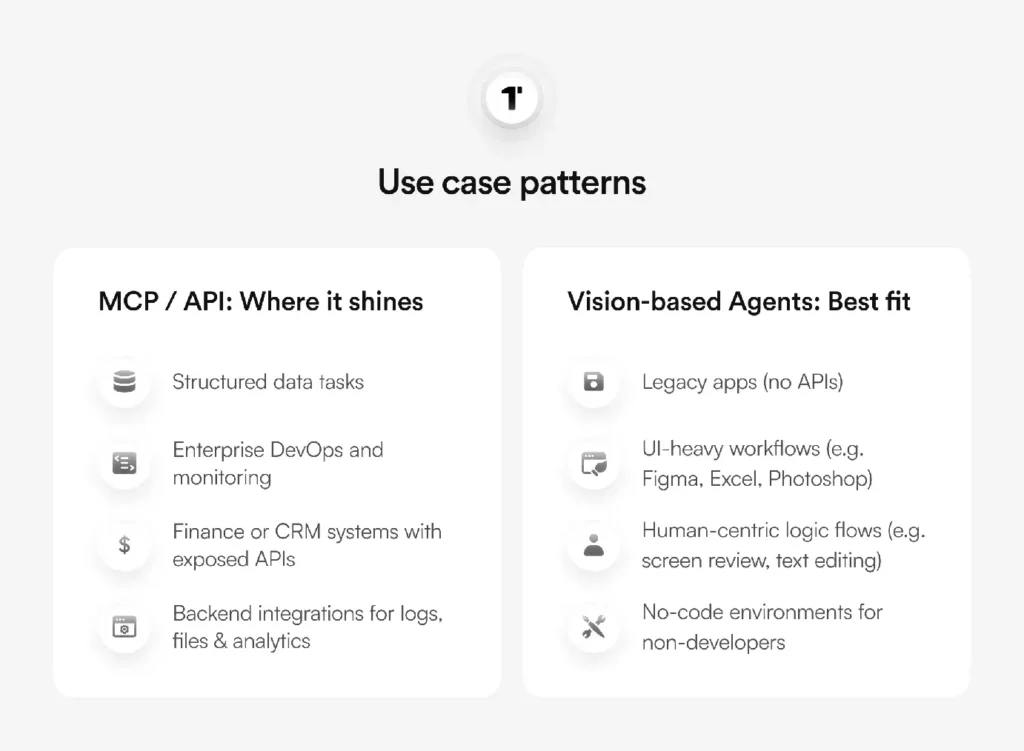

Use Case Patterns

MCP/API: Where It Shines

- Structured data tasks

- Enterprise DevOps and monitoring

- Finance or CRM systems with exposed APIs

- Backend integrations for logs, files, analytics

Vision-Based Agents: Best Fit

- Legacy apps (no APIs)

- UI-heavy workflows (e.g. Figma, Excel, Photoshop)

- Human-centric logic flows (e.g. screen review, text editing)

- No-code environments for non-developers

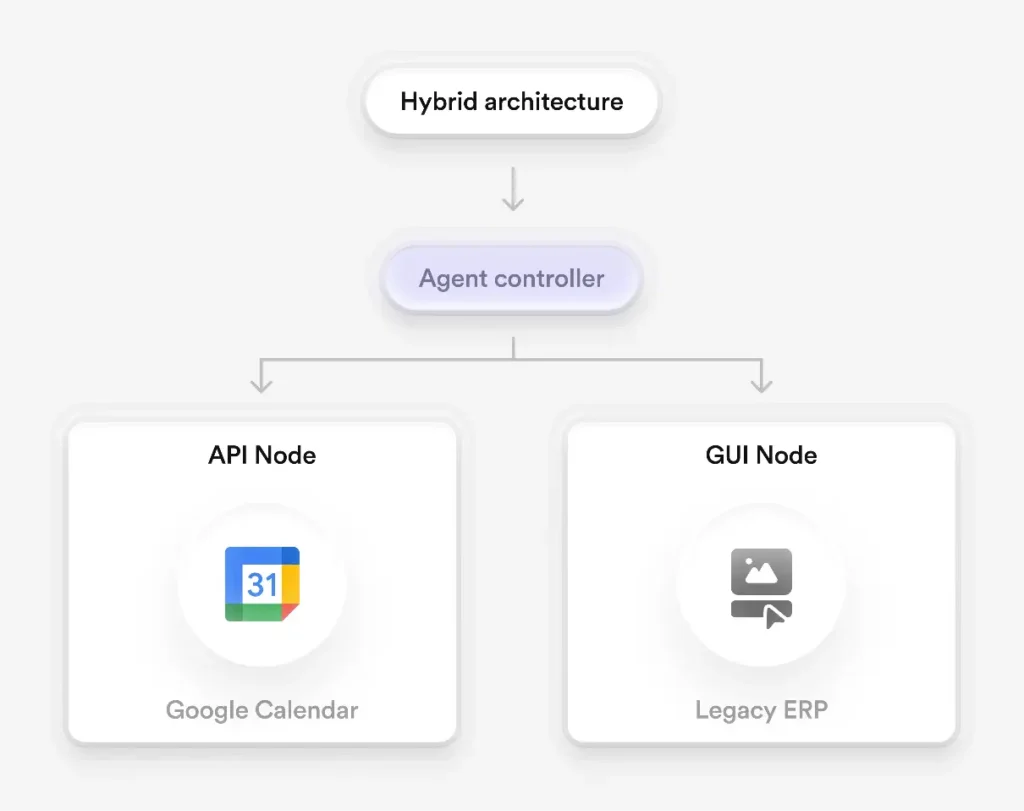

The Rise of Hybrid Architectures

Modern research and commercial tools (like OpenAI Operator and SmythOS) suggest the strongest agents will combine both paradigms:

- Use APIs when speed and precision matter

- Use vision where no integration exists or GUI visibility is required
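
The two rules above can be sketched as a small routing function. This is an illustrative sketch only: the `Task` fields, the `API_INTEGRATIONS` registry, and the app names are hypothetical and do not describe how OpenAI Operator, SmythOS, or any other product decides.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    app: str                          # target application
    action: str                       # what the agent should do there
    needs_visual_check: bool = False  # True if the result must be seen on screen


# Hypothetical registry: apps for which a structured integration exists.
API_INTEGRATIONS = {"calendar", "crm"}


def choose_path(task: Task) -> str:
    """Return "api" or "vision" for a task, following the two rules above."""
    if task.needs_visual_check:
        return "vision"   # GUI visibility is required
    if task.app in API_INTEGRATIONS:
        return "api"      # integration exists: take the fast, precise path
    return "vision"       # no integration: drive the UI instead


print(choose_path(Task("calendar", "create_event")))         # api
print(choose_path(Task("legacy_erp", "export_orders")))      # vision
print(choose_path(Task("crm", "review_dashboard", True)))    # vision
```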

Hybrid Models in Action

Examples of emerging hybrid approaches:

- RPA + LLM: Combine robotic process automation with GPT-4 for step reasoning (RPA vs API, Axiom 2022)

- LangChain + GUI Automation: Invoke UI workflows as fallback when tools are missing (LangChain 2024)

- Mistral Agents API: Structured agent APIs that can be extended with UI-action fallbacks (Mistral 2025)
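
The fallback idea running through these examples can be sketched in plain Python: try the structured tool first, and hand the task to a UI runner only if the tool is missing or fails. The sketch deliberately does not use LangChain, Mistral, or any RPA library; `ToolUnavailable`, `run_with_fallback`, and the stub tools are hypothetical names chosen for illustration.

```python
class ToolUnavailable(Exception):
    """Raised when a structured tool cannot serve the request."""


def run_with_fallback(task, api_tools, ui_runner):
    """Prefer the API tool; fall back to the GUI path at runtime."""
    tool = api_tools.get(task["name"])
    if tool is not None:
        try:
            return {"path": "api", "result": tool(**task["args"])}
        except ToolUnavailable:
            pass  # fall through to the GUI path
    return {"path": "ui", "result": ui_runner(task)}


# Stub tools and a stub UI runner, standing in for real integrations.
def create_invoice(customer):
    return f"invoice created for {customer}"


def flaky_export(report):
    raise ToolUnavailable("export endpoint is down")


def ui_runner(task):
    return f"completed '{task['name']}' through the GUI"


api_tools = {"create_invoice": create_invoice, "export_report": flaky_export}

first = run_with_fallback(
    {"name": "create_invoice", "args": {"customer": "ACME"}}, api_tools, ui_runner)
second = run_with_fallback(
    {"name": "export_report", "args": {"report": "Q3"}}, api_tools, ui_runner)
third = run_with_fallback(
    {"name": "resize_image", "args": {}}, api_tools, ui_runner)

print(first["path"], second["path"], third["path"])
```

Unlike a static routing table, this pattern decides at runtime, so a temporarily broken integration degrades to the slower UI path instead of failing the task.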

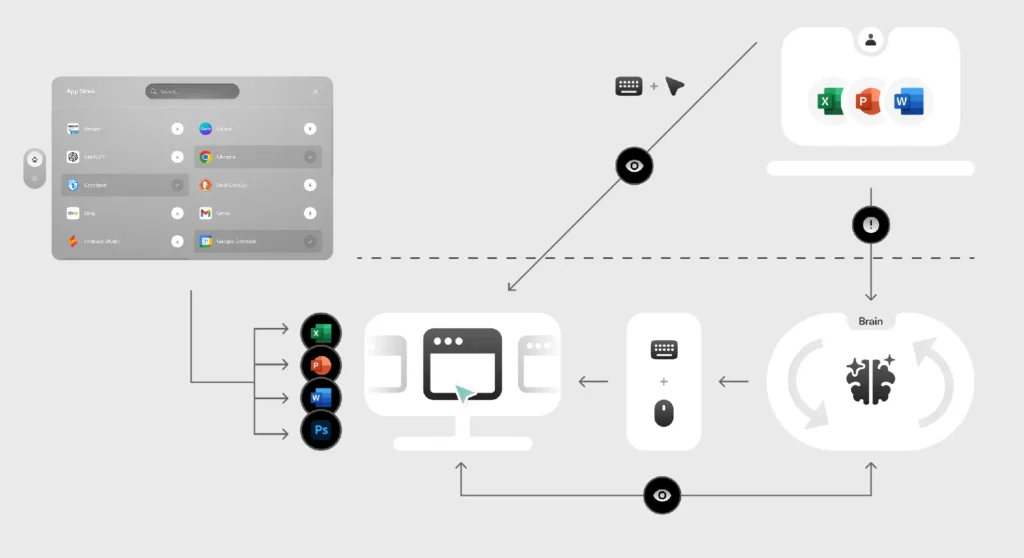

Enter Warmwind OS: A Vision-Native Operating System for Generalist Agents

While most existing vision-based systems are developed as thin wrappers or agent plugins around specific GUIs, Warmwind OS rethinks the entire execution layer from the ground up.

Rather than bolting vision onto an existing system, Warmwind is a full-stack unified operating layer designed natively for visual AI agents. It abstracts away the legacy requirement of code-based integration by simulating what a human would see and do at every level of interaction.

Core Architecture: VTAM + Visual Runtime

Warmwind uses a VTAM (Vision and Text-based Action Model), in combination with a VLLM, as its central execution brain. It perceives on-screen information in real time and interacts with traditional software via:

- Simulated mouse movements, keystrokes, and scroll events

- Real-time image understanding of the GUI

- Step-by-step reasoning across visual environments

Instead of needing access to an API, Warmwind “opens the app” just like a user would – interacting with Gmail, Outlook, Word, Figma, Photoshop, or even custom enterprise tools with no integration effort.
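
The perceive, reason, and act cycle described in this section can be sketched generically as follows. This is not Warmwind's implementation: the action types, the `run_agent` loop, and the toy screen are assumptions, and the vision model is replaced by a hand-written `decide` function so that the example runs without any model or real GUI.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Click:
    x: int
    y: int


@dataclass(frozen=True)
class TypeText:
    text: str


@dataclass(frozen=True)
class Scroll:
    dy: int


@dataclass(frozen=True)
class Done:
    pass


Action = Union[Click, TypeText, Scroll, Done]


def run_agent(observe, decide, act, max_steps=10):
    """Loop: look at the screen, pick one action, apply it, repeat."""
    history = []
    for _ in range(max_steps):
        screen = observe()                # perceive the current GUI state
        action = decide(screen, history)  # reason about the next step
        history.append(action)
        if isinstance(action, Done):
            break
        act(action)                       # simulate the input event
    return history


# Toy environment: a dict stands in for pixels and a real input driver.
screen_state = {"focused": None, "text": ""}


def observe():
    return dict(screen_state)


def decide(screen, history):
    if screen["focused"] != "search_box":
        return Click(120, 40)             # click the (assumed) search box
    if screen["text"] == "":
        return TypeText("quarterly report")
    return Done()


def act(action):
    if isinstance(action, Click):
        screen_state["focused"] = "search_box"
    elif isinstance(action, TypeText):
        screen_state["text"] += action.text


history = run_agent(observe, decide, act)
print(history)
```

Each iteration issues a single small input event and then looks at the screen again, which is why this style of agent is slower than a direct API call but works wherever a screen and cursor exist.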

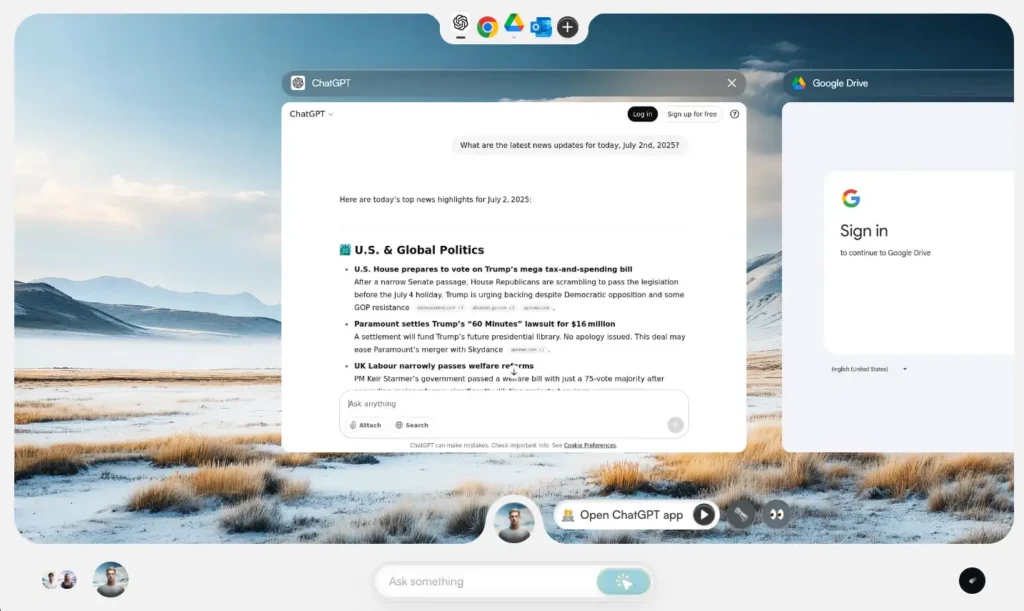

Warmwind in Action: Vision first.

Warmwind is strictly vision-based, but when reasoning or research is required it can delegate specific substeps to language models or API agents through custom or GUI-based apps such as ChatGPT or Claude (or other, more agentic tools).

Example:

- Content creation (news summaries, briefings) → handled by the GPT App and returned to the high-bandwidth vision interface.

- PDF generation, formatting, sending via Gmail or WhatsApp → performed by Warmwind through visual interaction

This mirrors how a human would operate: ask ChatGPT for help writing, then use productivity software to polish and distribute it. Warmwind does both autonomously.
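
The handoff in this example can be sketched as a short workflow in which each step names its executor and receives the previous step's output. Everything here is a stand-in: `text_app` and `visual_agent` are hypothetical stubs that return strings, not calls to ChatGPT, Gmail, or Warmwind.

```python
def text_app(instruction, artifact):
    """Stub for delegating a writing task to a chat-style app."""
    return f"[draft for: {instruction}]"


def visual_agent(instruction, artifact):
    """Stub for a GUI step that operates on the previous step's output."""
    return f"{artifact} -> {instruction}"


HANDLERS = {"text_app": text_app, "visual_agent": visual_agent}


def run_workflow(steps, handlers):
    """Run steps in order, passing each result on to the next step."""
    artifact = None
    log = []
    for executor, instruction in steps:
        artifact = handlers[executor](instruction, artifact)
        log.append(executor)
    return artifact, log


steps = [
    ("text_app", "summarize today's news"),
    ("visual_agent", "export as PDF"),
    ("visual_agent", "send via Gmail"),
]

final, log = run_workflow(steps, HANDLERS)
print(final)
```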

ChatGPT vs. Warmwind – Why It’s Not a Competition, But a Collaboration

Plug & Play for Any Software

Where API-based systems rely on supported backends, Warmwind needs only a screen and a cursor. This makes it compatible with:

- Old ERP systems

- Desktop-only software

- GUI-first design tools

- Custom internal dashboards

- And all other consumer applications without APIs

In other words: if a human can use it, Warmwind can use it.

Conclusion: Warmwind Bridges the Divide

Vision-based agents challenge the assumption that AI must rely on structured data and open interfaces to perform tasks. By enabling agents to see and act, Warmwind turns any software into an AI-controllable environment.

Warmwind doesn’t eliminate the need for APIs or structured pipelines – it makes them optional, and it enables fast implementations by packaging these external tools into apps.

- Use API tools when available, for speed and robustness

- Orchestrate both inside a unified runtime like Warmwind OS

In this paradigm, Warmwind is the first AI-native operating system built not for humans, but for agents.

And just like operating systems revolutionized computing by abstracting away the hardware, Warmwind is abstracting away integration, and unlocking the next generation of generalist AI.